Like most people in technology industries, the majority of my time lately has been taken up with all things AI. I'm fascinated with all of the open models that are free to download and can run locally on my own computer.

Finally, the power of the cloud in my own hands 🧛‍♂️. Muwahahahaha... ahem...

The problem is that the subject is exploding and diversifying at an alarming rate. Large language models were just the beginning... now we have text-to-image, image-to-3D, image-to-image (for style transfer), ControlNets (control over poses, compositions, and spatial consistency), in-painting and out-painting, voice-to-voice cloning, text-to-video, and a plethora of LoRAs (Low-Rank Adaptations) that let you apply fine-tuned tweaks on top of a much larger pre-trained model. Seemingly endless weird and wonderful new applications pop up every day.

I've probably said this before, but I think learning is actually fun; there's always been a persistent background craving in my head to know more. I enjoy understanding how something works, and it gives me much more appreciation and sense of wonder than just using it and seeing the results. But once I start looking into things, it can turn into an endless rabbit hole: it's hours on YouTube, it's reading articles and listening to audiobooks, it's... well, it's just turtles all the way down 🐢.

Artificial intelligence isn't a new thing despite its explosion in recent years, so I wanted to do a bit more than just scratch the surface. I wanted to explore, I wanted to truly understand, I wanted to be able to explain. And even with the relatively small amount I've covered so far, I'm pretty sure I could qualify as an archaeologist with all the digging I've been doing 🦴.

So let me take you back to the beginning, to the most basic aspects that underpin the foundations, that support the base, on which there is a platform, that modern generative AI stands on. Yes, it goes that deep.

This series is for anybody who wants to dive head first into this re-emerging, fast-paced world. Most of the concepts we'll cover will likely be around text, but similar concepts apply to images, audio, video, 3D models, etc. Hopefully you'll see that as we work through it all.

Where to start?

There are so many people, technologies, and breakthroughs across scientific, mathematical, and biological papers that have come together to make this possible. I found it very difficult to know where to begin because almost every article came with a certain level of assumed knowledge that I just didn't have. It's like you need an instruction manual to understand the quick start guide. The more layers I found, the more I wanted to know what was under the hood.

So let's start with the simplest concept shall we 😀...

Replicating A Brain

Nothing quite like picking one of the most complex things in existence to start with 😅. But this is really what it's all been about: replicating how our brains learn facts, process thoughts, recall memories, and make decisions.

In other words, how do all of those seemingly instant electrochemical reactions that whirr away incessantly in my body's penthouse suite result in actual comprehension and decision-making, including speech, movement, self-preservation, and so on?

Not sure about you, but I think that's far too big of a challenge to tackle in one go... Let the first of our many breakdowns begin!

Artificial Neural Networks

The heart of the question (anatomy pun intended) is how neurons tie together to create a network that converts input signals into activations, which then feed into other connected neurons, and on and on, until they turn into something meaningful at the other end.

Artificial neural networks (ANNs) are a computer's implementation of interconnected neurons, but before we get into their structure, how did they come about?

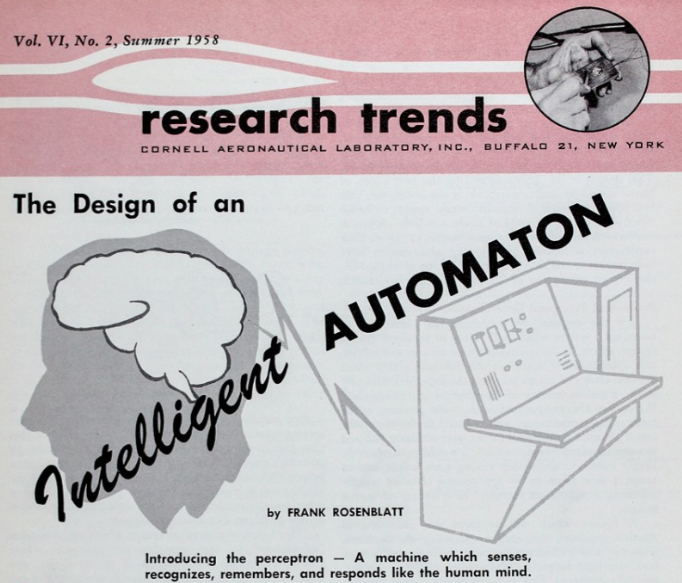

The Perceptron

In the late 1950s, Frank Rosenblatt was working at the Cornell Aeronautical Laboratory, where he came up with an algorithm for a 'binary classifier'. He intended it to be built as a physical machine that could identify images.

I think the following quote sums up the endearing naivety, yet incredibly optimistic vision he had... if only he knew that 60 years later, underpinned by the work he started, it would happen in such an unprecedented way!

“Stories about the creation of machines having human qualities have long been a fascinating province in the realm of science fiction. Yet we are about to witness the birth of such a machine - a machine capable of perceiving, recognizing and identifying its surroundings without any human training or control.”

Frank Rosenblatt - 1958

The first test was to classify pieces of paper that had a cross marked on them in random locations, according to whether the cross was on the left or the right of the page. This was quite a feat at the time, despite how mundane it sounds today. Bear in mind it wasn't programmed to do this; it learnt the skill.

The Perceptron was a single-layer artificial neural network, but instead of getting caught up in how it classified images, let's walk through something more basic... Breakdown number two!

Classifier

In the context of machine learning and artificial intelligence, a classifier is an algorithm that sorts inputs into distinct outputs called classes, hence 'classifier'. It answers questions like "Is this a tree?", where the answer is only ever yes or no, or "Is this a car, van, or bike?", where the answer is always one of those three options.

But how do you provide this (whatever it may be) as an input to an algorithm? One way could be to describe some basic traits; for example, "What has 4 legs, fur, a tail, and barks?" The answer is obviously "dog", but why is it obvious? What inputs has your brain processed for you to quickly and (hopefully) easily 'classify' the input (the description) into a specific output (the type of animal)?

So let's say we want our classifier to learn to identify dogs (represented by a positive output value meaning 'true'); otherwise it should say 'not-dog' (a negative output value meaning 'false'). This is called a 'binary classifier', because it has two output states: true or false, one or zero, A or B, dog or not-dog, and that's it.

Perceptron Algorithm

Inputs & Weights

Let's start with a set of discrete inputs that represent specific animal characteristics or traits. To say that the animal has a specific trait, the corresponding input is turned on by setting it to a positive value such as 1; if it doesn't have that trait, the input is turned off by setting it to a negative value such as -1.

Each input has an associated weight value, and each input is multiplied by its own weight. We will use weights of 1 or -1 here, as it makes the math a bit easier.

I think it's important to point out that there aren't really any rules about how large the number is. We'll stick to positive and negative 1, as I think it's easier to visualise adding and taking away points to end up with a score. But you can imagine that certain traits may be more telling than others: most animals have fur (small weight), but not many of them bark (larger weight).

A dog trait that is turned on is a good thing (dogs usually have fur), just as a not-dog trait that's turned off is also a good thing (dogs usually don't quack). The opposite of each is a bad thing for our doggy classifier, since we are hoping for a positive dog result (dogs don't usually have 2 legs, nor do they have feathers).

So by setting a negative weight for the inputs we expect to be off when the animal is a dog (e.g., 'two legs' or 'feathers'), an input that is off gives us "input × weight" = "-1 × -1", and the answer is 1. That's because whenever you multiply a negative number by another negative number, the answer is always positive.

- quack is off, therefore the input is -1

- the weight for quack is -1

- -1 × -1 is 1, and a positive result means it might be a dog = good

If the input should be on, and it is (so it's set to 1), then a positive weight means we'll also get a positive result. This is the trick: multiplying the input by a weight lets us use the same formula and always get a positive value when the input is in its desired state for a dog, regardless of whether that desired state is on or off. That's also why we don't use zero to represent off: since zero multiplied by anything is zero, an input of zero could never push the result positive or negative.

- tail is on so "1 × 1 = 1". And a positive value means it might be a dog

- quack is off so "-1 × -1 = 1". And again, positive, so it might be a dog

- fur is off so "-1 × 1 = -1". The negative result means it might not be a dog

- two legs is on, so "1 × -1 = -1". Negative again, so it also might not be a dog

Essentially, the idea is that when you add up all the results, you get a higher positive number when the traits match what a dog has (on or off), and a lower, negative number when they don't.

That felt very repetitive to type out, so I think I've hammered the point home well enough. It's an important one to remember in all of this:

The weights are there to amplify each of the inputs based on whether it's expected to be set to on or off.
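If it helps to see the arithmetic, here's a tiny Python sketch of the multiply-and-add step. The trait names, the `WEIGHTS` table and the `weighted_sum` helper are made up for illustration, using the same +1/-1 convention as above.

```python
# Hypothetical traits: +1 means the trait is on, -1 means it's off.
TRAITS = ["tail", "quack", "fur", "two_legs"]

# Hand-picked weights: +1 for traits a dog should have on,
# -1 for traits a dog should have off.
WEIGHTS = {"tail": 1, "quack": -1, "fur": 1, "two_legs": -1}


def weighted_sum(inputs: dict) -> int:
    """Multiply each input by its weight, then add all the results together."""
    return sum(inputs[trait] * WEIGHTS[trait] for trait in TRAITS)


dog = {"tail": 1, "quack": -1, "fur": 1, "two_legs": -1}    # every trait as expected
mixed = {"tail": 1, "quack": -1, "fur": -1, "two_legs": 1}  # the example from the list
duck = {"tail": 1, "quack": 1, "fur": -1, "two_legs": 1}

print(weighted_sum(dog))    # 4
print(weighted_sum(mixed))  # 0
print(weighted_sum(duck))   # -2
```

The 'mixed' animal from the list lands on exactly 0: two traits in favour of dog, two against.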

Activation

Well done, you've now got a bunch of input values and weights that you've multiplied with each other and then added all the results together... so what? What does that help us understand?

Well, on its own, nothing really. What's missing is a way to decide whether the output is enough to call it a dog or not. This is called activation, and it describes how the results we've got so far trigger, or activate, this fake neuron in our artificial neural network.

The perceptron used a 'step activation', which is basically a threshold switch. When the sum of all the inputs multiplied by their weights is greater than zero, it activates (i.e., the output is dog); if it's lower than zero, it does not activate (i.e., not-dog). There's nothing in between: the answer steps between not-dog and dog.
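As a sketch, the step activation is a one-line function. The text doesn't say what happens at exactly zero, so treating zero as 'not-dog' here is my assumption.

```python
def step(total: float) -> int:
    """Step activation: 1 (dog) if the total is above zero, otherwise 0 (not-dog).

    Assumption: a total of exactly 0 is treated as not-dog.
    """
    return 1 if total > 0 else 0


for total in (-3, 0, 0.0001, 5, 999):
    print(total, "->", "dog" if step(total) else "not-dog")
```

Notice that 0.0001 and 999 both come out as dog; the size of the total doesn't matter, only which side of zero it's on.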

There are many other approaches for activation that we will touch on later.

However, there is a slight problem: our 'dog classifier' may produce a result of 3 for a fox because it shares a lot of traits with a dog, while an actual dog might score 5. Both 3 and 5 are positive values, and we don't want to classify them both as dog when one of them isn't. What we need is a way to set the threshold, and that's where a bias comes in to save the day.

Bias

Each neuron has one bias (our dogceptron has a single output neuron, so just one bias), and it's added to the weighted sum to shift the final result up or down. So if we set the bias to -4, the fox that gave us 3 becomes -1, which is below zero, so the output is not-dog. The dog that scored 5 last time now becomes 1, and since that's above zero, it gets us where we need to be.
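Here's the fox-and-dog arithmetic as a small Python sketch. The raw scores 3 and 5 are the made-up values from the example, and `classify` is a hypothetical helper that adds the bias before applying the step.

```python
BIAS = -4  # shifts every score down by 4, so effectively "dog" now needs a score above 4


def classify(score: int, bias: int = BIAS) -> str:
    """Add the bias to the raw weighted sum, then apply the step activation."""
    return "dog" if score + bias > 0 else "not-dog"


print("fox:", 3 + BIAS, classify(3))  # 3 - 4 = -1 -> not-dog
print("dog:", 5 + BIAS, classify(5))  # 5 - 4 =  1 -> dog
print("fox, no bias:", classify(3, bias=0))  # without the bias the fox sneaks in as dog
```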

Because a 'step activation' is used, we don't really care what the value is, only whether it's positive or negative. Anything above zero triggers the activation and is classified as dog; an output of 0.0001 is the same as 999 as far as we're concerned. So every breed of dog, even a little Mexican Hairless, would still be classed as dog despite the 'fur' input being off, because there are enough other dog traits to make the final result positive.

Training

Up till this point, we've set our doggy classifier's weights based on what we knew each input represented. When you know what each input means, it's easy enough to set the weights yourself (e.g., -1 for quack, +1 for fur). But then that wouldn't be learning, would it? It would be programming.

What if you have no idea what any of the inputs actually represent? How should you go about getting our 'dogceptron' (get it? doggy-perceptron) to learn what the weights should be?

Well, I'm glad you asked... we train it - the clue was in the heading for this section.

Supervised Learning

A perceptron is trained using a 'supervised learning' algorithm. That means the training data is labelled: every example comes with the correct answer attached. You don't have to know what each specific input represents; you just need to know whether what you are inputting is a dog or not.

To see what I mean, instead of a small set of on/off switches for each trait, scale that idea up to a photograph where each input could be the colour of each pixel. There could be thousands or even millions of inputs! So you should hopefully see why it's important to be able to train these things without really knowing what each of the inputs represent.

To train our dogceptron, we'd prepare a set of known animal inputs (e.g., the specific sets of attributes for a cat, mouse, duck, etc.). Each time one is presented, you adjust the weights and bias up or down depending on whether the output was correct.

When the output is wrong, every weight is adjusted by the same amount, which could be a constant value, or could be based on how wrong the output was. This process is known as the 'perceptron learning rule' (it isn't 'backpropagation', which is the technique used to train networks with multiple layers). If you are presenting a dog, you increase the weight for each input that is turned on by that amount, and decrease the weight for each input that is off. If it's some other animal, you do the opposite. The bias is nudged the same way, as if it were the weight on an input that is always on. But if the output is correct, you don't have to adjust anything.
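Here's one training step written out as a Python sketch, assuming the same +1/-1 encoding for inputs and +1 (dog) / -1 (not-dog) for the expected answer. The function names and the learning rate `lr` (the 'amount' each weight moves) are illustrative, not from any particular library.

```python
def predict(weights, bias, inputs):
    """Weighted sum plus bias, then a step: +1 for dog, -1 for not-dog."""
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if total > 0 else -1


def train_step(weights, bias, inputs, target, lr=1):
    """Apply one perceptron-learning-rule update for a single labelled example."""
    if predict(weights, bias, inputs) == target:
        return weights, bias  # correct answer: leave everything alone
    # Wrong answer: move every weight by lr. For a dog (target +1), inputs that are
    # on (+1) go up and inputs that are off (-1) go down; not-dog does the opposite.
    new_weights = [w + lr * target * x for w, x in zip(weights, inputs)]
    # The bias behaves like the weight on an input that is always on (+1).
    return new_weights, bias + lr * target


# Start from all-zero weights and show it a dog: [tail, quack, fur, two_legs].
weights, bias = train_step([0, 0, 0, 0], 0, [1, -1, 1, -1], target=1)
print(weights, bias)  # [1, -1, 1, -1] 1
```

Showing it the same dog a second time changes nothing, because it now gets that one right.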

That's it, you've just completed your first training step for your very first AI! It's not likely to be right about anything just yet. For it to get better, you need to train it more, and more... again, and again, and again. Nudging the weights in the right direction each time should make the output converge, so it gives you enough correct results to satisfy your needs, hopefully 100% of the time.
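And here's the 'again, and again' part as a sketch: loop over a small labelled dataset until a full pass makes no mistakes. The animals and their traits are invented for illustration (with an explicit 'barks' input so that a dog and a cat aren't identical), and `max_epochs` is just a safety limit I've assumed.

```python
# Hypothetical labelled data: [tail, fur, barks, quacks, two_legs] as +1/-1,
# with label +1 for dog and -1 for not-dog.
DATA = [
    ([1, 1, 1, -1, -1], 1),     # dog
    ([1, -1, 1, -1, -1], 1),    # hairless dog
    ([1, 1, -1, -1, -1], -1),   # cat
    ([1, -1, -1, 1, 1], -1),    # duck
    ([1, -1, -1, -1, 1], -1),   # chicken
    ([-1, -1, -1, -1, 1], -1),  # human
]


def predict(weights, bias, inputs):
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if total > 0 else -1


def train(data, lr=1, max_epochs=100):
    """Repeat the perceptron update over the data until one pass has no mistakes."""
    weights, bias = [0] * len(data[0][0]), 0
    for epoch in range(1, max_epochs + 1):
        mistakes = 0
        for inputs, target in data:
            if predict(weights, bias, inputs) != target:
                weights = [w + lr * target * x for w, x in zip(weights, inputs)]
                bias += lr * target
                mistakes += 1
        if mistakes == 0:
            return weights, bias, epoch  # a clean pass: training has converged
    return weights, bias, max_epochs


weights, bias, passes = train(DATA)
print("weights:", weights, "bias:", bias, "passes:", passes)
# weights: [0, 0, 2, 0, 0] bias: 0 passes: 2
```

With this toy data it settles after two passes and ends up relying entirely on 'barks', a nice reminder that it learns whatever separates the examples you give it, not necessarily what you meant it to learn.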

I was just double-checking some of the details and stumbled across another post also using dog vs not-dog for its examples. I promise I didn't steal the idea; I guess there are other people out there who think like me. Not sure if that's a good thing or bad 🤪.

Now let's kick it up a notch; there's still a lot more to go through and I'm dilly-dallying in the details. Back to the history lesson...

Training the original Mark I Perceptron

The Mark I Perceptron (the first machine built using the perceptron algorithm) had 400 photocells as inputs, arranged in a 20 by 20 grid. It was trained on 10,000 images of circles or squares through a single layer of 500 neurons (association units). After training, it correctly determined which shape it was 99.8% of the time, regardless of the position or orientation of the shape on the page.

A later experiment increased the layer to 1,000 neurons (association units) and trained it using only 60 images of squares and diamonds. This time it was right 100% of the time. It was also trained on as few as 20 images of the letters E and X, and again achieved a 100% success rate, even when the letters were rotated by up to 30°!

Perhaps that doesn't blow your mind... Just remember this was happening in the 1950s, and some geek in a lab created a robot that could look at a sheet of paper and tell you what shape was on it. Pretty amazing back then. So amazing, in fact, that the Photo Division of the CIA was very keen to study whether it could be used to quickly and accurately classify the silhouettes of military targets (such as planes and ships) in aerial photographs.

To me, the most incredible part of all this (which was inspired by biology) is that the photocells (called sensory units, or S-units) were not wired up 1:1 with the association units (A-units), whose outputs fed the adjustable weights. They were connected... randomly!

Yes, literally randomly. Each A-unit had a random handful of photocells wired to it, some overlapping, some not.
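To get a feel for what random wiring means, here's a loose Python sketch. The 20 × 20 grid of photocells and the roughly 500 association units come from the text above, but the fan-in of 10 photocells per unit and the 'more than half lit' firing rule are assumptions for illustration, not the real Mark I's figures.

```python
import random

GRID = 20                   # 20 x 20 photocells, as described above
N_SENSORS = GRID * GRID     # 400 sensory units (S-units)
N_ASSOC = 500               # association units (A-units), roughly as quoted
CONNECTIONS_PER_UNIT = 10   # ASSUMPTION: illustrative fan-in, not the Mark I's real value

rng = random.Random(0)      # seeded so the "random" wiring is reproducible

# Each A-unit gets its own random handful of photocells; different units may overlap.
wiring = [rng.sample(range(N_SENSORS), CONNECTIONS_PER_UNIT) for _ in range(N_ASSOC)]


def a_unit_outputs(image):
    """image: flat list of 400 values (1 = lit, 0 = dark). Returns one 0/1 per A-unit.

    ASSUMPTION: an A-unit fires if more than half of its photocells are lit.
    """
    return [1 if sum(image[i] for i in cells) > CONNECTIONS_PER_UNIT // 2 else 0
            for cells in wiring]


print(wiring[0])                           # the photocells feeding the first A-unit
print(sum(a_unit_outputs([1] * N_SENSORS)))  # a fully lit page fires every A-unit
```

The A-unit outputs would then be the inputs to a trainable perceptron like the dogceptron, so the learning only ever happens on the far side of this random wiring.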

The Perceptron Problem

However, there is an issue... and it's quite a big one. A single-layer perceptron only works when the inputs can be separated into their classes in a linear fashion (known as being 'linearly separable'). This is a little hard to picture with our dog example having so many inputs.

But try to imagine all of the possible values plotted as points on a flat, two-dimensional graph. The 'step activation' is like drawing a single straight line across the graph so that the points fall on either side of it: everything on one side is classed as dog, and everything on the other side is not-dog.

If you can't separate all of the points into your two classes with a single straight line, then training will keep nudging the same weights up and down and never converge (in other words, the accuracy of the output will not improve beyond a certain point, if at all).

There are better examples of this linear separation problem, but I like dogs, and the other examples I've seen are far too 'mathy'... so I chose dogs. Whether or not it actually works mathematically, just assume that it does and we can get on with our lives.
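For the curious, here's the classic 'mathy' example as a Python sketch: XOR (output on when exactly one of two inputs is on) can't be split by one straight line, while AND can. It uses a simple perceptron training loop; the function names and the 1,000-pass limit are my own choices.

```python
def predict(weights, bias, inputs):
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if total > 0 else -1


def train(data, lr=1, max_epochs=1000):
    """Perceptron learning rule; returns (converged, weights, bias)."""
    weights, bias = [0] * len(data[0][0]), 0
    for _ in range(max_epochs):
        mistakes = 0
        for inputs, target in data:
            if predict(weights, bias, inputs) != target:
                weights = [w + lr * target * x for w, x in zip(weights, inputs)]
                bias += lr * target
                mistakes += 1
        if mistakes == 0:
            return True, weights, bias   # a clean pass: converged
    return False, weights, bias          # gave up: still making mistakes


# Inputs and labels use the same +1 (on) / -1 (off) convention as the dog example.
AND = [([-1, -1], -1), ([-1, 1], -1), ([1, -1], -1), ([1, 1], 1)]
XOR = [([-1, -1], -1), ([-1, 1], 1), ([1, -1], 1), ([1, 1], -1)]

print("AND converged:", train(AND)[0])  # True
print("XOR converged:", train(XOR)[0])  # False
```

No matter how many passes you allow, the XOR weights just keep see-sawing, because no single straight line puts both 'exactly one on' points on one side and the other two on the other.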

The solution? No, do not give up... Considering that a single layer worked so well (when it worked), shall we just double-down and introduce more layers?

With additional layers, each taking its inputs from the previous layer, you can effectively draw more than one straight line through the points on the graph.
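Here's a sketch of that idea: a two-layer network that solves XOR. The weights are picked by hand rather than trained, and it uses 0/1 inputs instead of +1/-1 to keep the numbers small. Each hidden neuron draws one line (roughly 'a OR b' and 'not both'), and the output neuron only fires for points that land on the right side of both lines.

```python
def step(total):
    return 1 if total > 0 else 0


def neuron(weights, bias, inputs):
    """Weighted sum plus bias, then a step activation."""
    return step(sum(w * x for w, x in zip(weights, inputs)) + bias)


def xor_net(a, b):
    # Hidden layer: two neurons, each drawing its own straight line.
    h_or = neuron([1, 1], -0.5, [a, b])     # on if a OR b is on
    h_nand = neuron([-1, -1], 1.5, [a, b])  # on unless both a AND b are on
    # Output layer: takes the hidden layer as its inputs, fires only if both are on.
    return neuron([1, 1], -1.5, [h_or, h_nand])


for a in (0, 1):
    for b in (0, 1):
        print(a, b, "->", xor_net(a, b))
```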

Recap

I think that's enough for today; it's a good point to break before we drop deeper into this. I hope you enjoyed this little starter session. Here's a quick recap:

- Inputs are multiplied by weights: this turns each input into a positive contribution when it's in the state we want and a negative contribution when it isn't.

- A bias value allows a neuron's result to be shifted up or down so that it works with the activation function. Some activation functions favour being centred around zero, and it's unlikely that an unbiased result would naturally do that.

- A single layer only works for a linearly separable dataset; for more complex scenarios you need more layers.